The AI Image Generation Landscape: A Deep Dive

Unveiling the Future: The Cutting-Edge Landscape of AI Image Generation

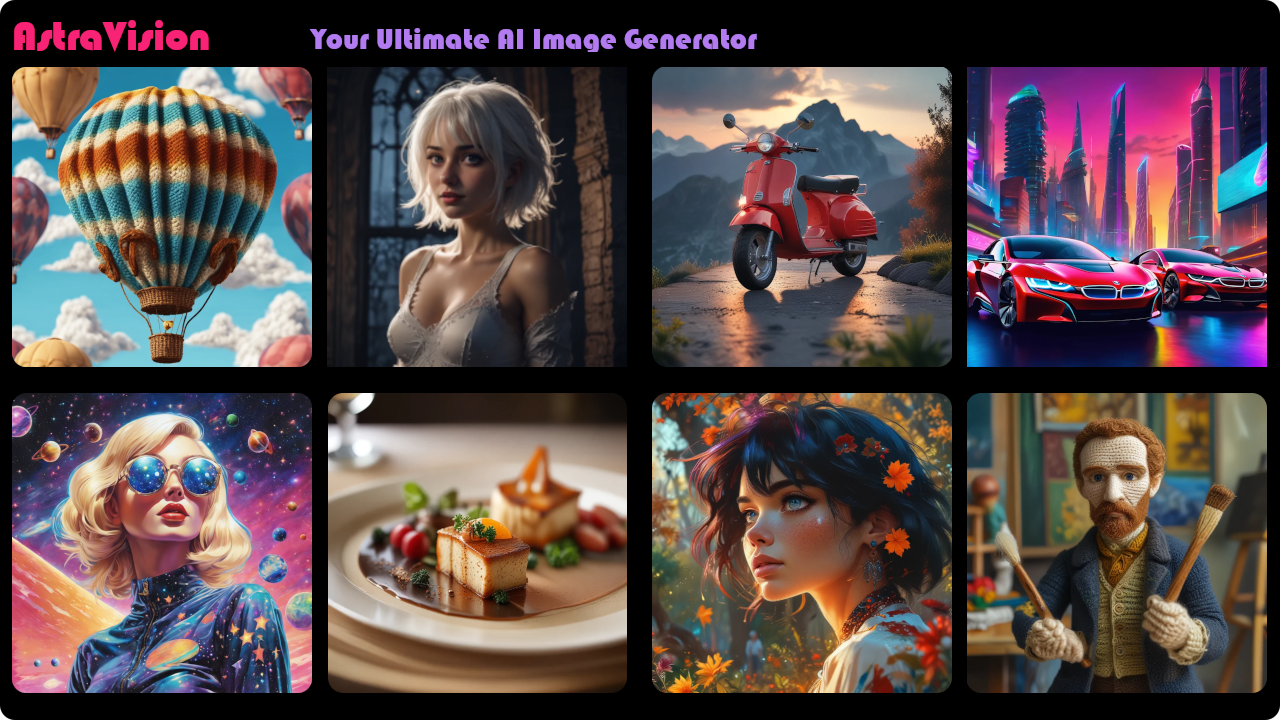

The landscape of AI image generation is a fascinating blend of cutting-edge technology and boundless creativity. As AI continues to evolve, it is transforming how we create and interact with visual content. This blog post explores the main players, techniques, and future trends in AI image generation, with a particular focus on Stable Diffusion and DALL-E.

Main Players in AI Image Generation

Several organizations and platforms lead the AI image generation field, each contributing unique advancements and capabilities:

OpenAI

OpenAI has been a pioneer in the AI space, and DALL-E and DALL-E 2 are among its best-known contributions. DALL-E is renowned for generating highly detailed and imaginative images from text descriptions, pushing the boundaries of what's possible in AI-driven creativity.

Google Research

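Google has also made substantial strides in AI image generation through projects like DeepDream and BigGAN (the latter from DeepMind, which is also part of Alphabet). DeepDream produces surreal, dreamlike images by amplifying the patterns a trained network detects, while BigGAN generates highly detailed, high-fidelity images. Together they showed how AI can blur the line between reality and fantasy.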

Stability AI

Stability AI backed the development and public release of the Stable Diffusion model, a highly efficient and accessible approach to image generation. Stable Diffusion stands out because it can produce high-quality images on consumer-grade GPUs, and because its weights were released openly, anyone can download and run it. This brought advanced image generation to a much broader audience.

NVIDIA

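NVIDIA's research into generative adversarial networks (GANs) has produced groundbreaking results such as StyleGAN, known for strikingly photorealistic synthetic faces, and GauGAN, which turns rough segmentation sketches into photorealistic landscapes. These tools have set new standards for photorealistic image synthesis and AI-assisted artistic rendering.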

AI Image Generation Techniques

Several techniques drive the current state of AI image generation:

Generative Adversarial Networks (GANs)

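GANs are a class of machine learning frameworks designed to generate new data that resembles a given dataset. A GAN consists of two neural networks: a generator, which turns random noise into candidate images, and a discriminator, which tries to tell real images from generated ones. The two are trained against each other: as the discriminator gets better at spotting fakes, the generator must get better at fooling it. This adversarial process pushes the generator toward highly detailed and realistic outputs.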
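To make the adversarial setup concrete, here is a minimal sketch of the standard GAN training objectives in plain Python. It assumes the discriminator outputs a probability that an image is real; the function names are illustrative, and a real implementation would compute these losses on tensors in a framework such as PyTorch or JAX and backpropagate through both networks.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """Low when the discriminator scores real images near 1 and generated ones near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when generated images are scored near 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# A discriminator that cannot tell real from fake outputs 0.5 everywhere
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2*log(2), about 1.386
print(generator_loss([0.5, 0.5]))                   # log(2), about 0.693
```

Training alternates between lowering `discriminator_loss` with respect to the discriminator's weights and lowering `generator_loss` with respect to the generator's weights. The non-saturating form of the generator loss was suggested in the original GAN paper because it gives the generator stronger gradients early in training, when the discriminator easily rejects its samples.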

Variational Autoencoders (VAEs)

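VAEs are another type of generative model. An encoder maps each image to a probability distribution in a lower-dimensional latent space, and a decoder maps points in that space back to images. Training balances reconstruction accuracy against keeping those latent distributions close to a simple prior (usually a standard Gaussian), which makes the latent space smooth. That smoothness is why VAEs are particularly useful for generating variations of images and interpolating between different images.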
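The two ingredients that make a VAE's latent space smooth, sampling with the reparameterization trick and the KL-divergence penalty toward a standard Gaussian, can be sketched in a few lines of plain Python. The encoder outputs below (`mu_*`, `log_var_*`) are made-up numbers standing in for what a trained encoder network would produce, and the helper names are hypothetical.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from a diagonal Gaussian N(mu, sigma^2) to N(0, I): the VAE regularizer."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def interpolate(z_a, z_b, steps):
    """Evenly spaced latent codes on the straight line from z_a to z_b."""
    return [[a + (b - a) * i / (steps - 1) for a, b in zip(z_a, z_b)] for i in range(steps)]

# Hypothetical encoder outputs for two images
mu_a, log_var_a = [0.5, -1.0], [-2.0, -2.0]
mu_b, log_var_b = [-0.5, 1.0], [-2.0, -2.0]
rng = random.Random(0)
z_a = reparameterize(mu_a, log_var_a, rng)
z_b = reparameterize(mu_b, log_var_b, rng)
for z in interpolate(z_a, z_b, steps=5):
    print([round(v, 3) for v in z])  # each code would be passed to the decoder
```

During training, the KL term is added to a reconstruction loss. It keeps nearby latent codes meaningful, so the intermediate points produced by `interpolate` tend to decode to plausible in-between images rather than noise.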

Diffusion Models

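Diffusion models, like Stable Diffusion, generate images by starting from random noise and iteratively denoising it. During training, Gaussian noise is gradually added to real images and a neural network learns to predict that noise; at generation time, the network removes noise step by step until an image emerges. Diffusion models train more stably than GANs and produce high-quality, diverse images, but sampling requires many passes through the network, so they are not inherently cheap. Stable Diffusion reduces this cost by running the process in a compressed latent space instead of on full-resolution pixels.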
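The noising and denoising loop can be sketched in plain Python on a tiny vector standing in for an image. This follows the DDPM formulation of Ho et al. (2020) with a linear noise schedule; the schedule values, the `oracle_noise` predictor, and the three-number "image" are illustrative assumptions. In a real model, `predict_noise` is a large trained network (a U-Net in Stable Diffusion) rather than a formula.

```python
import math
import random

def linear_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (betas) and their cumulative products (alpha_bars)."""
    betas, alpha_bars, prod = [], [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        betas.append(beta)
        alpha_bars.append(prod)
    return betas, alpha_bars

def add_noise(x0, noise, alpha_bar):
    """Forward process used in training: jump straight to noise level t in one step."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * n for x, n in zip(x0, noise)]

def sample(predict_noise, dim, betas, alpha_bars, rng):
    """Reverse process: start from pure Gaussian noise and denoise one step at a time."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(len(betas))):
        beta, alpha_bar = betas[t], alpha_bars[t]
        eps_hat = predict_noise(x, t)  # the trained network's noise estimate
        coef = beta / math.sqrt(1.0 - alpha_bar)
        mean = [(xi - coef * ei) / math.sqrt(1.0 - beta) for xi, ei in zip(x, eps_hat)]
        if t > 0:
            # Re-inject a little fresh noise (variance beta_t), except on the final step
            x = [m + math.sqrt(beta) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x

# Hypothetical "dataset" containing a single image, so the ideal noise predictor is exact
target = [0.2, -0.5, 0.9]
betas, alpha_bars = linear_schedule()

def oracle_noise(x, t):
    ab = alpha_bars[t]
    return [(xi - math.sqrt(ab) * ci) / math.sqrt(1.0 - ab) for xi, ci in zip(x, target)]

result = sample(oracle_noise, len(target), betas, alpha_bars, random.Random(0))
print([round(v, 4) for v in result])  # lands on target
```

Training teaches the network to recover `noise` from `add_noise(x0, noise, alpha_bar)` at randomly chosen steps, and the sampler then reuses those predictions in reverse. The thousand network calls in this loop are the main cost of diffusion sampling, which is why faster samplers and latent-space models matter in practice.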

Stable Diffusion and DALL-E

Stable Diffusion

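Stable Diffusion is a groundbreaking latent diffusion model developed by the CompVis group at LMU Munich and Runway, with support from Stability AI. A VAE first compresses an image into a much smaller latent representation, a denoising network carries out the diffusion process in that latent space, and the VAE decoder converts the result back into pixels. This design lets it generate high-quality images efficiently, and its openly released weights have made it a favorite among researchers and developers, who can fine-tune and extend it.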
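A quick calculation shows why working in latent space helps. Stable Diffusion v1 models use a VAE that downsamples each side of the image by a factor of 8 and produces 4 latent channels, so a 512×512 RGB image becomes a 4×64×64 latent. The helpers below are a hypothetical illustration of that arithmetic, not part of any library, and other model versions may use different settings.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """(channels, height, width) of the latent, assuming SD v1-style VAE settings."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)

def compression_ratio(height, width, image_channels=3, **vae_settings):
    """How many times fewer values the denoiser processes compared with raw pixels."""
    c, h, w = latent_shape(height, width, **vae_settings)
    return (image_channels * height * width) / (c * h * w)

print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these settings the denoising network handles 48 times fewer values per 512×512 image than a pixel-space model would, which goes a long way toward explaining why Stable Diffusion fits on consumer GPUs.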

DALL-E

DALL-E, developed by OpenAI, has captured the imagination of many with its ability to generate creative and detailed images from textual descriptions. Its successor, DALL-E 2 (2022), produces higher-resolution, more realistic images and can edit existing images from text instructions, which set a new bar for text-to-image quality at its release.
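Diffusion-based text-to-image systems, including Stable Diffusion and the diffusion decoder in DALL-E 2, commonly steer generation toward the prompt with a technique called classifier-free guidance. At each denoising step the network predicts the noise twice, once with the text condition and once without, and the sampler extrapolates away from the unconditioned prediction. The sketch below shows only that combination step; the noise vectors are made-up numbers, and 7.5 is a commonly used default scale for Stable Diffusion rather than a fixed rule.

```python
def classifier_free_guidance(eps_cond, eps_uncond, guidance_scale):
    """Combine prompt-conditioned and unconditioned noise predictions.

    guidance_scale = 1 reproduces the conditioned prediction;
    larger values push the sample to follow the prompt more closely.
    """
    return [u + guidance_scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]

# Made-up noise predictions for a 3-value latent at a single denoising step
eps_with_prompt = [0.30, -0.10, 0.05]
eps_without_prompt = [0.20, 0.00, 0.05]
guided = classifier_free_guidance(eps_with_prompt, eps_without_prompt, guidance_scale=7.5)
print([round(v, 3) for v in guided])  # [0.95, -0.75, 0.05]
```

In a full sampler, the guided prediction is used in place of a single noise prediction at every denoising step. Higher scales tend to follow the prompt more literally at some cost in image diversity.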

The Future of AI Image Generation

The future of AI image generation is bright and full of potential. Here are some trends and advancements to watch for:

Improved Realism and Detail

Future models are likely to keep improving in realism and detail, making it increasingly difficult to distinguish AI-generated images from real photographs.

Personalization and Customization

AI will become more adept at generating personalized and customized images, allowing users to tailor visuals to their specific needs and preferences.

Integration with Augmented Reality (AR) and Virtual Reality (VR)

AI-generated images will play a significant role in AR and VR, creating immersive and interactive environments that respond dynamically to user inputs.

Ethical and Responsible AI

As AI capabilities grow, so will the emphasis on ethical and responsible use. Ensuring that AI-generated content is used in ways that respect privacy, consent, and authenticity will be crucial.

Advances in Research

Ongoing research in areas like multimodal learning, zero-shot generation, and unsupervised learning will continue to push the boundaries of what AI can achieve in image generation.

State-of-the-Art Research

Current research in AI image generation focuses on several key areas:

Multimodal Learning

Integrating text, audio, and image data to create richer and more contextually aware models.

Zero-Shot and Few-Shot Learning

Enabling models to handle new concepts or tasks from few or no task-specific examples, increasing their versatility and applicability.

Unsupervised Learning

Developing models that can learn and generate images without explicit labeled data, making AI training more efficient and scalable.

Conclusion

AI image generation is an exciting and rapidly evolving field, with numerous players and techniques contributing to its advancement. Stable Diffusion and DALL-E are at the forefront of this revolution, pushing the boundaries of creativity and technology. As research and technology continue to advance, the future of AI image generation promises even more innovative and transformative possibilities.

Step into the world of AI image generation and witness the dawn of a new era in visual creativity!
